I. References

TensorFlow Chinese community: http://www.tensorfly.cn/tfdoc/tutorials/mnist_beginners.html

MNIST is an entry-level computer vision dataset made up of images of handwritten digits.

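It also includes a label for each image that tells us which digit it shows. For example, the four sample images in the referenced tutorial are labeled 5, 0, 4, and 1.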

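In this tutorial we train a machine learning model to predict the digit in an image. The goal is not to design a world-class, sophisticated model (source code for a state-of-the-art model comes later in the tutorial series) but to introduce how to use TensorFlow. So we start with a very simple mathematical model called Softmax Regression.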

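The code for this tutorial is short, and the genuinely interesting part fits in just three lines. What matters, though, is understanding the ideas behind those lines: the TensorFlow workflow and the basic concepts of machine learning. For that reason, this tutorial explains in detail how the code works.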

II. Source code on GitHub

https://github.com/jxq0816/tensorflow-mnist

III. The MNIST dataset

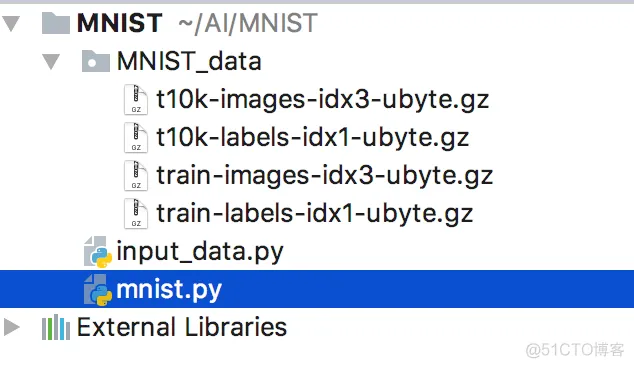

The input_data.py file is provided by Google and downloads the MNIST dataset files; a VPN is required to access it.

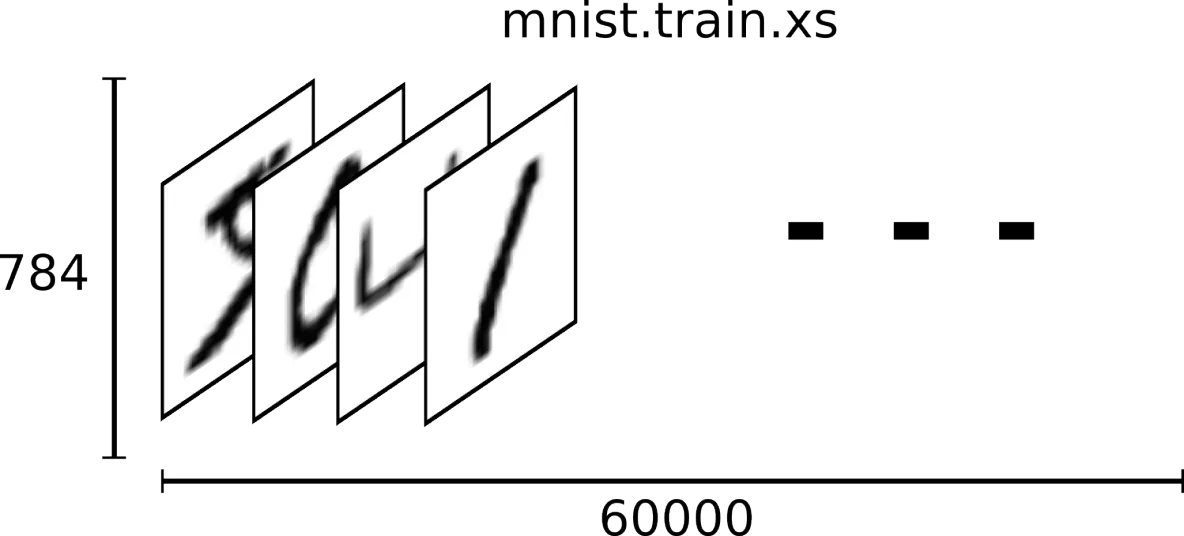

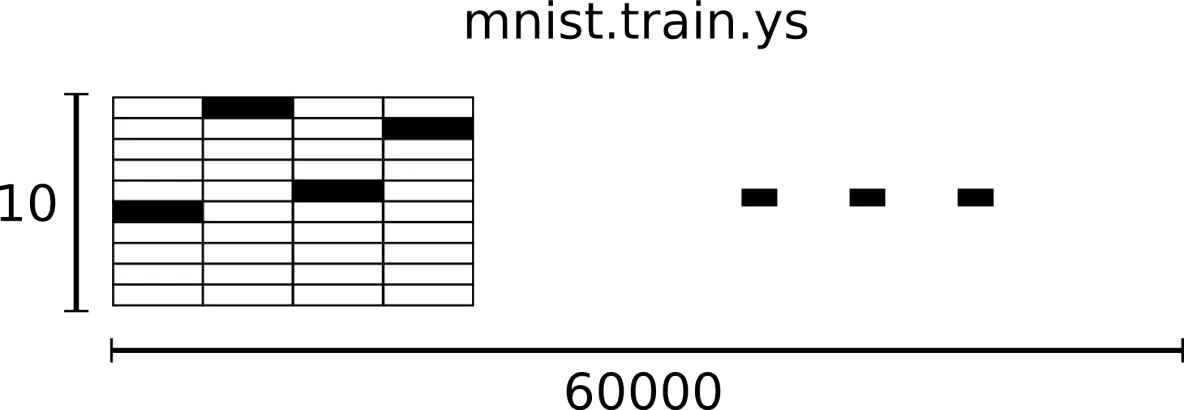

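The downloaded dataset comes in two parts: 60,000 training examples and 10,000 test examples (mnist.test). The input_data.py loader listed later in this post additionally holds out the first 5,000 training examples as mnist.validation, which leaves 55,000 in mnist.train. This split matters: when designing a machine learning model you must keep a separate test set that is never used for training and serves only to evaluate the model, so that you can tell how well the model carries over to other data (generalization). As mentioned earlier, each MNIST data point has two parts: an image of a handwritten digit and a corresponding label. We call the images "xs" and the labels "ys". Both the training set and the test set contain xs and ys; for example, the training images are mnist.train.images and the training labels are mnist.train.labels.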
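The hold-out idea can be sketched in a few lines of plain Python. The function name `split_train_validation` and the toy data are illustrative assumptions, not part of input_data.py; the slicing only mirrors the way that file reserves the first training examples for validation.

```python
def split_train_validation(examples, validation_size):
    """Hold out the first `validation_size` examples for validation."""
    if not 0 <= validation_size <= len(examples):
        raise ValueError("validation_size must be between 0 and len(examples)")
    validation = examples[:validation_size]
    train = examples[validation_size:]
    return train, validation


# Hypothetical stand-in data: 60 (image, label) pairs instead of 60,000.
examples = [("image-%d" % i, i % 10) for i in range(60)]
train, validation = split_train_validation(examples, validation_size=5)

print(len(train), len(validation))
```

Neither list shares an example with the other, which is the property that makes the held-out set a fair check on the model.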

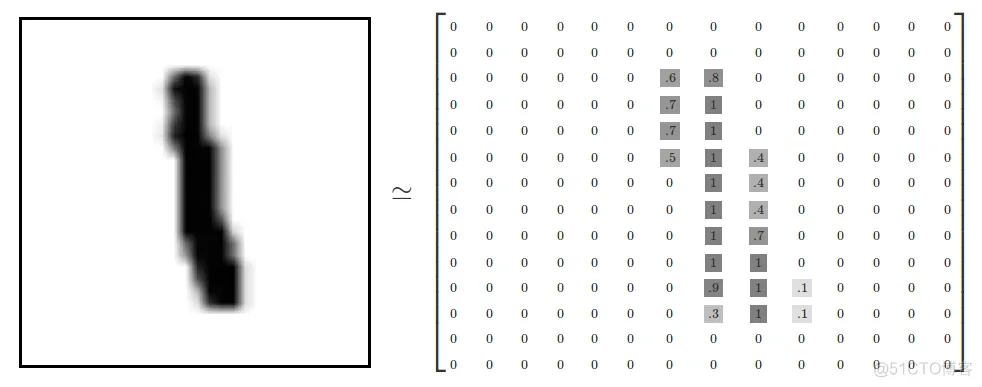

Each image is 28 pixels by 28 pixels, so we can represent it as a 28x28 array of numbers.

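We flatten this array into a vector of length 28x28 = 784. How the array is flattened (the order of the numbers) does not matter, as long as every image is flattened the same way. Seen this way, the MNIST images are points in a 784-dimensional vector space with a fairly complex structure (note: visualizing this kind of data is computationally intensive).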
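As a minimal sketch of the flattening step, the snippet below uses row-major order, which is one possible choice among many. The helper name `flatten_row_major` and the synthetic image are assumptions made for illustration.

```python
ROWS, COLS = 28, 28


def flatten_row_major(image):
    """Flatten a 2-D list of pixels into one list, row by row."""
    return [pixel for row in image for pixel in row]


# Hypothetical image: each pixel value encodes its own (row, col) position.
image = [[r * COLS + c for c in range(COLS)] for r in range(ROWS)]
vector = flatten_row_major(image)

print(len(vector))  # 784
# With row-major order, pixel (r, c) lands at index r * COLS + c.
print(vector[3 * COLS + 5] == image[3][5])
```

Any other fixed ordering would work equally well for softmax regression, provided the same ordering is applied to every image.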

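Flattening the image array discards the image's two-dimensional structure. That is clearly not ideal: the best computer vision methods exploit this structure, and later tutorials cover them. In this tutorial we ignore it; the simple model introduced here, softmax regression, makes no use of the structure.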

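So in the MNIST training set, mnist.train.images is a tensor of shape [55000, 784] (the 60,000 downloaded training images minus the 5,000 that input_data.py holds out for validation). The first dimension indexes the image, and the second indexes the pixel within each image. Each entry is the intensity of one pixel in one image, a value between 0 and 1.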
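The 0-to-1 range comes from rescaling the raw 8-bit pixel values. A rough sketch of that rescaling, assuming raw intensities in [0, 255] (the helper name `scale_pixels` is hypothetical):

```python
def scale_pixels(raw_pixels):
    """Map 8-bit intensities in [0, 255] to floats in [0.0, 1.0]."""
    return [p / 255.0 for p in raw_pixels]


raw = [0, 51, 255]          # black, dark gray, full intensity
scaled = scale_pixels(raw)
print(scaled)
```

input_data.py does the equivalent on whole NumPy arrays by multiplying by 1.0 / 255.0 when the requested dtype is float32.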

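The corresponding MNIST labels are digits from 0 to 9 that say which digit an image shows. For this tutorial we want the labels as "one-hot vectors". A one-hot vector is 0 in every dimension except one, where it is 1. So in this tutorial the digit n is represented as a 10-dimensional vector whose only 1 is in the nth dimension (counting from 0). For example, the label 0 is represented as [1,0,0,0,0,0,0,0,0,0]. mnist.train.labels is therefore a [55000, 10] matrix of numbers.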
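One-hot encoding is easy to sketch in plain Python. The helper names `one_hot` and `from_one_hot` below are illustrative assumptions; input_data.py performs the same conversion on whole NumPy arrays in `dense_to_one_hot`.

```python
def one_hot(label, num_classes=10):
    """Return a list that is 1 at index `label` and 0 everywhere else."""
    if not 0 <= label < num_classes:
        raise ValueError("label must be in [0, num_classes)")
    vector = [0] * num_classes
    vector[label] = 1
    return vector


def from_one_hot(vector):
    """Recover the digit from a one-hot vector."""
    return vector.index(1)


print(one_hot(0))  # [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
print(one_hot(4))  # [0, 0, 0, 0, 1, 0, 0, 0, 0, 0]
print(from_one_hot(one_hot(7)))  # 7
```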

    # Copyright 2015 Google Inc. All Rights Reserved.
    #
    # Licensed under the Apache License, Version 2.0 (the "License");
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at
    #
    #     http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.
    """Functions for downloading and reading MNIST data."""
    from __future__ import absolute_import
    from __future__ import division
    from __future__ import print_function

    import gzip
    import os

    import tensorflow.python.platform

    import numpy
    from six.moves import urllib
    from six.moves import xrange  # pylint: disable=redefined-builtin
    import tensorflow as tf

    SOURCE_URL = 'http://yann.lecun.com/exdb/mnist/'


    def maybe_download(filename, work_directory):
        """Download the data from Yann's website, unless it's already here."""
        if not os.path.exists(work_directory):
            os.mkdir(work_directory)
        filepath = os.path.join(work_directory, filename)
        if not os.path.exists(filepath):
            filepath, _ = urllib.request.urlretrieve(SOURCE_URL + filename,
                                                     filepath)
            statinfo = os.stat(filepath)
            print('Successfully downloaded', filename, statinfo.st_size,
                  'bytes.')
        return filepath


    def _read32(bytestream):
        dt = numpy.dtype(numpy.uint32).newbyteorder('>')
        return numpy.frombuffer(bytestream.read(4), dtype=dt)[0]


    def extract_images(filename):
        """Extract the images into a 4D uint8 numpy array [index, y, x, depth]."""
        print('Extracting', filename)
        with gzip.open(filename) as bytestream:
            magic = _read32(bytestream)
            if magic != 2051:
                raise ValueError(
                    'Invalid magic number %d in MNIST image file: %s' %
                    (magic, filename))
            num_images = _read32(bytestream)
            rows = _read32(bytestream)
            cols = _read32(bytestream)
            buf = bytestream.read(rows * cols * num_images)
            data = numpy.frombuffer(buf, dtype=numpy.uint8)
            data = data.reshape(num_images, rows, cols, 1)
            return data


    def dense_to_one_hot(labels_dense, num_classes=10):
        """Convert class labels from scalars to one-hot vectors."""
        num_labels = labels_dense.shape[0]
        index_offset = numpy.arange(num_labels) * num_classes
        labels_one_hot = numpy.zeros((num_labels, num_classes))
        labels_one_hot.flat[index_offset + labels_dense.ravel()] = 1
        return labels_one_hot


    def extract_labels(filename, one_hot=False):
        """Extract the labels into a 1D uint8 numpy array [index]."""
        print('Extracting', filename)
        with gzip.open(filename) as bytestream:
            magic = _read32(bytestream)
            if magic != 2049:
                raise ValueError(
                    'Invalid magic number %d in MNIST label file: %s' %
                    (magic, filename))
            num_items = _read32(bytestream)
            buf = bytestream.read(num_items)
            labels = numpy.frombuffer(buf, dtype=numpy.uint8)
            if one_hot:
                return dense_to_one_hot(labels)
            return labels


    class DataSet(object):

        def __init__(self, images, labels, fake_data=False, one_hot=False,
                     dtype=tf.float32):
            """Construct a DataSet.

            one_hot arg is used only if fake_data is true.  `dtype` can be
            either `uint8` to leave the input as `[0, 255]`, or `float32` to
            rescale into `[0, 1]`.
            """
            dtype = tf.as_dtype(dtype).base_dtype
            if dtype not in (tf.uint8, tf.float32):
                raise TypeError(
                    'Invalid image dtype %r, expected uint8 or float32' % dtype)
            if fake_data:
                self._num_examples = 10000
                self.one_hot = one_hot
            else:
                assert images.shape[0] == labels.shape[0], (
                    'images.shape: %s labels.shape: %s' % (images.shape,
                                                           labels.shape))
                self._num_examples = images.shape[0]

                # Convert shape from [num examples, rows, columns, depth]
                # to [num examples, rows*columns] (assuming depth == 1)
                assert images.shape[3] == 1
                images = images.reshape(images.shape[0],
                                        images.shape[1] * images.shape[2])
                if dtype == tf.float32:
                    # Convert from [0, 255] -> [0.0, 1.0].
                    images = images.astype(numpy.float32)
                    images = numpy.multiply(images, 1.0 / 255.0)
            self._images = images
            self._labels = labels
            self._epochs_completed = 0
            self._index_in_epoch = 0

        @property
        def images(self):
            return self._images

        @property
        def labels(self):
            return self._labels

        @property
        def num_examples(self):
            return self._num_examples

        @property
        def epochs_completed(self):
            return self._epochs_completed

        def next_batch(self, batch_size, fake_data=False):
            """Return the next `batch_size` examples from this data set."""
            if fake_data:
                fake_image = [1] * 784
                if self.one_hot:
                    fake_label = [1] + [0] * 9
                else:
                    fake_label = 0
                return [fake_image for _ in xrange(batch_size)], [
                    fake_label for _ in xrange(batch_size)]
            start = self._index_in_epoch
            self._index_in_epoch += batch_size
            if self._index_in_epoch > self._num_examples:
                # Finished epoch
                self._epochs_completed += 1
                # Shuffle the data
                perm = numpy.arange(self._num_examples)
                numpy.random.shuffle(perm)
                self._images = self._images[perm]
                self._labels = self._labels[perm]
                # Start next epoch
                start = 0
                self._index_in_epoch = batch_size
                assert batch_size <= self._num_examples
            end = self._index_in_epoch
            return self._images[start:end], self._labels[start:end]


    def read_data_sets(train_dir, fake_data=False, one_hot=False,
                       dtype=tf.float32):
        class DataSets(object):
            pass
        data_sets = DataSets()

        if fake_data:
            def fake():
                return DataSet([], [], fake_data=True, one_hot=one_hot,
                               dtype=dtype)
            data_sets.train = fake()
            data_sets.validation = fake()
            data_sets.test = fake()
            return data_sets

        TRAIN_IMAGES = 'train-images-idx3-ubyte.gz'
        TRAIN_LABELS = 'train-labels-idx1-ubyte.gz'
        TEST_IMAGES = 't10k-images-idx3-ubyte.gz'
        TEST_LABELS = 't10k-labels-idx1-ubyte.gz'
        VALIDATION_SIZE = 5000

        local_file = maybe_download(TRAIN_IMAGES, train_dir)
        train_images = extract_images(local_file)

        local_file = maybe_download(TRAIN_LABELS, train_dir)
        train_labels = extract_labels(local_file, one_hot=one_hot)

        local_file = maybe_download(TEST_IMAGES, train_dir)
        test_images = extract_images(local_file)

        local_file = maybe_download(TEST_LABELS, train_dir)
        test_labels = extract_labels(local_file, one_hot=one_hot)

        # Hold out the first VALIDATION_SIZE training examples for validation.
        validation_images = train_images[:VALIDATION_SIZE]
        validation_labels = train_labels[:VALIDATION_SIZE]
        train_images = train_images[VALIDATION_SIZE:]
        train_labels = train_labels[VALIDATION_SIZE:]

        data_sets.train = DataSet(train_images, train_labels, dtype=dtype)
        data_sets.validation = DataSet(validation_images, validation_labels,
                                       dtype=dtype)
        data_sets.test = DataSet(test_images, test_labels, dtype=dtype)

        return data_sets

IV. Training on the MNIST dataset

1. mnist.py
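Before the TensorFlow listing, it may help to see what its softmax and cross-entropy lines compute for a single example. The following is a plain-Python sketch, not the TensorFlow implementation; the function names and the sample values are assumptions, and the max-subtraction is a common numerical-stability trick that the listing itself does not spell out.

```python
import math


def softmax(evidence):
    """Turn a list of evidence scores into probabilities that sum to 1."""
    # Subtracting the max leaves the result unchanged but avoids overflow.
    peak = max(evidence)
    exps = [math.exp(e - peak) for e in evidence]
    total = sum(exps)
    return [v / total for v in exps]


def cross_entropy(true_dist, predicted):
    """Compute -sum(y' * log(y)) for one example."""
    # Terms with a true probability of 0 contribute nothing, so skip them.
    return -sum(t * math.log(p)
                for t, p in zip(true_dist, predicted) if t > 0)


# With W and b initialized to zeros, the evidence for every class is 0.
evidence = [0.0] * 10
y = softmax(evidence)                      # uniform: 0.1 for each digit
y_true = [0, 0, 0, 1, 0, 0, 0, 0, 0, 0]    # one-hot label for the digit 3
loss = cross_entropy(y_true, y)            # equals log(10), about 2.3026

print(y)
print(loss)
```

The TensorFlow listing that follows expresses the same two computations with tf.nn.softmax and tf.reduce_sum, applied to a whole batch at once.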

    # coding=utf-8
    # Written against the TensorFlow 1.x graph API (tf.placeholder, tf.Session).
    import tensorflow as tf
    import input_data

    # --------------------------- Define variables ---------------------------
    # We describe the interacting operations by manipulating symbolic variables.
    # x is a placeholder: a value we feed in when TensorFlow runs a computation.
    # We want to accept any number of MNIST images, each flattened into a
    # 784-dimensional vector, so we use a 2-D float tensor of shape [None, 784]
    # (None means the first dimension can be of any length).
    print("define model variable")
    x = tf.placeholder("float", [None, 784])

    # A Variable is a modifiable tensor that lives in TensorFlow's graph of
    # interacting operations. It can be used as an input to a computation and
    # can also be modified by it. Model parameters are generally Variables.
    # W: weights. W has shape [784, 10] because we multiply the 784-dimensional
    # image vectors by it to get 10-dimensional evidence vectors, one entry
    # per digit class.
    W = tf.Variable(tf.zeros([784, 10]))
    # b: bias. Its shape is [10], so it can be added directly to the output.
    b = tf.Variable(tf.zeros([10]))

    # --------------------------- Define the model ---------------------------
    print("define model")
    # tf.matmul(x, W) multiplies x by W; x is a 2-D tensor holding many inputs.
    # We then add b and pass the sum to tf.nn.softmax. This one line defines
    # the softmax regression model; y is our predicted probability distribution.
    y = tf.nn.softmax(tf.matmul(x, W) + b)

    # --------------------------- Train the model ---------------------------
    print("define train model variable")
    # y_ is the true distribution: a new placeholder for the correct answers.
    y_ = tf.placeholder("float", [None, 10])
    # Cross-entropy measures how inefficient our predictions are at describing
    # the truth.
    cross_entropy = -tf.reduce_sum(y_ * tf.log(y))
    # Minimize cross-entropy with gradient descent at a learning rate of 0.01.
    train_step = tf.train.GradientDescentOptimizer(0.01).minimize(cross_entropy)

    # Initialize the variables we created.
    init = tf.global_variables_initializer()
    sess = tf.Session()
    sess.run(init)

    mnist = input_data.read_data_sets('MNIST_data', one_hot=True)
    print("start to train model")
    for i in range(1000):
        # At each step we grab a batch of 100 random data points from the
        # training set and run train_step, feeding the batch in to replace
        # the placeholders.
        batch_xs, batch_ys = mnist.train.next_batch(100)
        sess.run(train_step, feed_dict={x: batch_xs, y_: batch_ys})

    # --------------------------- Evaluate the model ---------------------------
    print("review model")
    correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))
    print(sess.run(accuracy,
                   feed_dict={x: mnist.test.images, y_: mnist.test.labels}))

2. Results

The final accuracy should be about 91%.
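The accuracy figure is the fraction of test images whose most probable predicted digit matches the label. A small plain-Python sketch of that calculation follows; the helper names and the three-class toy data are assumptions chosen to keep the example short.

```python
def argmax(values):
    """Index of the largest entry (the first one if there are ties)."""
    return max(range(len(values)), key=lambda i: values[i])


def accuracy(predictions, labels):
    """Fraction of rows where the predicted class equals the labeled class."""
    correct = [argmax(p) == argmax(t) for p, t in zip(predictions, labels)]
    return sum(correct) / float(len(correct))


# Hypothetical predicted distributions and one-hot labels for four examples.
predictions = [
    [0.1, 0.7, 0.2],
    [0.8, 0.1, 0.1],
    [0.3, 0.3, 0.4],
    [0.6, 0.3, 0.1],
]
labels = [
    [0, 1, 0],
    [1, 0, 0],
    [0, 1, 0],
    [1, 0, 0],
]

print(accuracy(predictions, labels))  # 0.75
```

Only the third example is misclassified here, so three of four predictions are correct.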

Is that good? Well, not really. In fact, it is quite poor, because we are using a very simple model. With a few small improvements we can reach 97% accuracy, and the best models can exceed 99.7%!
