
import tensorflow as tfdef weight_variable(shape): initial = tf.truncated_normal(shape, stddev=0.1)

return tf.Variable(initial)def bias_variable(shape): initial = tf.constant(0.1, shape=shape)

return tf.Variable(initial)# strides=[batch, height, width, channels]def conv2d(x, W):

return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')# ksize=[batch, height, width, channels]

def max_pool_2x2(x): return tf.nn.max_pool(x, ksize=[1, 2, 2, 1],

strides=[1, 2, 2, 1], padding='SAME')def conv_layer(input, shape): W = weight_variable(shape)

b = bias_variable([shape[3]]) return tf.nn.relu(conv2d(input, W) + b)def full_layer(input, size):

in_size = int(input.get_shape()[1]) W = weight_variable([in_size, size]) b = bias_variable([size])

return1.2.3.4.5.6.7.8.9.10.11.12.13.14.15.16.17.18.19.20.21.22.23.24.25.26.27.28.29.30.31.32.33.from tensorflow.examples.tutorials.mnist import input_dataimport tensorflow as tfimport numpy as npfrom

layers import conv_layer, max_pool_2x2, full_layerDATA_DIR = '/tmp/data

'MINIBATCH_SIZE = 50STEPS = 5000mnist = input_data.read_data_sets(DATA_DIR,

one_hot=True)x = tf.placeholder(tf.float32, shape=[None, 784])y_ = tf

.placeholder(tf.float32, shape=[None, 10])# -1 autox_image = tf

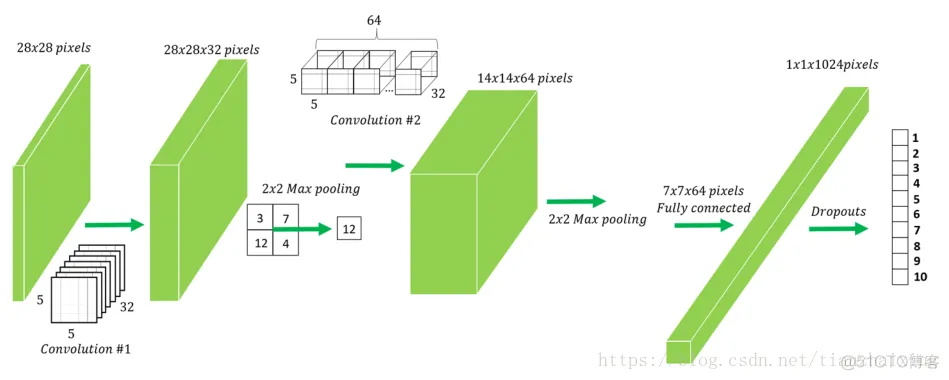

.reshape(x, [-1, 28, 28, 1])# 5x5 1 channel 32 feature mapsconv1 = conv_layer(x_image,

shape=[5, 5, 1, 32])conv1_pool = max_pool_2x2(conv1)# 5x5 32

channels 64 feature mapsconv2 = conv_layer(conv1_pool,

shape=[5, 5, 32, 64])conv2_pool = max_pool_2x2(conv2)

# -1 auto# 28 -> 14 -> 7 conv2_flat = tf.reshape(conv2_pool,

[-1, 7*7*64])full_1 = tf.nn.relu(full_layer(conv2_flat, 1024))keep_prob = tf

.placeholder(tf.float32)full1_drop = tf.nn.dropout(full_1,

keep_prob=keep_prob)y_conv = full_layer(full1_drop, 10)cross_entropy = tf

.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=y_conv, labels=y_))

train_step = tf.train.AdamOptimizer(1e-4).minimize(cross_entropy)correct_prediction = tf

.equal(tf.argmax(y_conv, 1), tf.argmax(y_, 1))accuracy = tf

.reduce_mean(tf.cast(correct_prediction, tf.float32))with tf.Session() as sess:

sess.run(tf.global_variables_initializer()) for i in range(STEPS):

batch = mnist.train.next_batch(MINIBATCH_SIZE) if i % 100 == 0:

train_accuracy = sess.run(accuracy, feed_dict={x: batch[0], y_: batch[1],

keep_prob: 1.0}) print("step {}, training accuracy {}".format(i, train_accuracy))

sess.run(train_step, feed_dict={x: batch[0], y_: batch[1], keep_prob: 0.5})

# 10 blocks of 1000 images each. 784(28*28) X = mnist.test.images.reshape(10, 1000, 784)

Y = mnist.test.labels.reshape(10, 1000, 10) test_accuracy = np.mean(

[sess.run(accuracy, feed_dict={x: X[i], y_: Y[i], keep_prob: 1.0}) for i in range(10)])

print("test accuracy: {}".format(test_accuracy))1.2.3.4.5.6.7.8.9.10.11.12.13.14.15.16.17.18.19.
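The `7*7*64` in the flatten step comes from the `'SAME'` padding used by `conv2d` and `max_pool_2x2`: with `'SAME'`, each spatial output dimension is `ceil(input / stride)`, so the stride-1 convolutions keep 28x28 and each stride-2 pooling halves it (28 -> 14 -> 7). The plain-Python sketch below redoes that arithmetic and counts the trainable parameters the layer shapes imply (a weight tensor plus one bias per output channel or unit, as `conv_layer` and `full_layer` create them). The helper `same_out` is hypothetical, written only for this illustration.

```python
import math


def same_out(size, stride):
    """Output length of a 'SAME'-padded conv/pool along one axis: ceil(size / stride)."""
    return math.ceil(size / stride)


# Spatial size through the network: conv (stride 1) -> pool (stride 2), twice.
h = 28
h = same_out(h, 1)  # conv1
h = same_out(h, 2)  # pool1
h = same_out(h, 1)  # conv2
h = same_out(h, 2)  # pool2
flat_size = h * h * 64  # 64 feature maps after conv2

# Parameter counts: weight tensor size + one bias per output channel/unit.
params = {
    "conv1": 5 * 5 * 1 * 32 + 32,
    "conv2": 5 * 5 * 32 * 64 + 64,
    "full_1": flat_size * 1024 + 1024,
    "y_conv": 1024 * 10 + 10,
}

print("flattened size:", flat_size)
for name, n in params.items():
    print(name, n)
print("total:", sum(params.values()))
```

By this count `full_1` holds about 98% of the roughly 3.27 million parameters, and it is exactly the layer the script follows with dropout.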

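The script feeds `keep_prob: 0.5` to `train_step` but `keep_prob: 1.0` whenever it measures accuracy. In TensorFlow 1.x, `tf.nn.dropout` keeps each element with probability `keep_prob` and scales the survivors by `1 / keep_prob` (zeroing the rest), so the expected activation is unchanged. The NumPy sketch below mimics that behavior; the helper name `inverted_dropout` and the all-ones stand-in activations are assumptions for illustration, not TensorFlow code.

```python
import numpy as np


def inverted_dropout(x, keep_prob, rng):
    """Keep each element with probability keep_prob and scale survivors by 1/keep_prob,
    so the expected value of each element is unchanged."""
    mask = rng.random(x.shape) < keep_prob
    return np.where(mask, x / keep_prob, 0.0)


rng = np.random.default_rng(0)
activations = np.ones((1000, 1024))  # stand-in for the full_1 outputs

train_out = inverted_dropout(activations, keep_prob=0.5, rng=rng)
eval_out = inverted_dropout(activations, keep_prob=1.0, rng=rng)

print("fraction kept during training:", (train_out > 0).mean())  # close to 0.5
print("mean during training:", train_out.mean())                 # close to 1.0
print("identical at evaluation:", np.array_equal(eval_out, activations))
```

With `keep_prob=0.5` about half the units are zeroed and the survivors are doubled, so the mean stays near 1.0; with `keep_prob=1.0` the mask keeps everything and the output equals the input, which is why the accuracy evaluations feed 1.0.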

```text
2018-07-10 17:19:02.125927: I tensorflow/core/platform/cpu_feature_guard.cc:137] Your CPU supports instructions that this TensorFlow binary was not compiled to use: SSE4.1 SSE4.2 AVX AVX2 FMA
2018-07-10 17:19:02.197368: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:892] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
2018-07-10 17:19:02.197689: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1030] Found device 0 with properties:
name: GeForce GTX TITAN X major: 5 minor: 2 memoryClockRate(GHz): 1.076
pciBusID: 0000:01:00.0
totalMemory: 11.92GiB freeMemory: 11.66GiB
2018-07-10 17:19:02.197705: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1120] Creating TensorFlow device (/device:GPU:0) -> (device: 0, name: GeForce GTX TITAN X, pci bus id: 0000:01:00.0, compute capability: 5.2)
step 0, training accuracy 0.219999998808
step 100, training accuracy 0.699999988079
step 200, training accuracy 0.759999990463
step 300, training accuracy 0.920000016689
step 400, training accuracy 0.879999995232
step 500, training accuracy 0.939999997616
step 600, training accuracy 0.939999997616
step 700, training accuracy 0.980000019073
step 800, training accuracy 0.980000019073
step 900, training accuracy 0.939999997616
step 1000, training accuracy 0.939999997616
step 1100, training accuracy 0.939999997616
step 1200, training accuracy 0.980000019073
step 1300, training accuracy 0.959999978542
step 1400, training accuracy 0.959999978542
step 1500, training accuracy 0.959999978542
step 1600, training accuracy 0.959999978542
step 1700, training accuracy 0.939999997616
step 1800, training accuracy 0.980000019073
step 1900, training accuracy 1.0
step 2000, training accuracy 1.0
step 2100, training accuracy 1.0
step 2200, training accuracy 0.980000019073
step 2300, training accuracy 0.939999997616
step 2400, training accuracy 0.980000019073
step 2500, training accuracy 0.980000019073
step 2600, training accuracy 1.0
step 2700, training accuracy 0.980000019073
step 2800, training accuracy 0.959999978542
step 2900, training accuracy 0.980000019073
step 3000, training accuracy 0.959999978542
step 3100, training accuracy 1.0
step 3200, training accuracy 0.980000019073
step 3300, training accuracy 1.0
step 3400, training accuracy 1.0
step 3500, training accuracy 0.959999978542
step 3600, training accuracy 0.980000019073
step 3700, training accuracy 1.0
step 3800, training accuracy 0.959999978542
step 3900, training accuracy 0.980000019073
step 4000, training accuracy 0.959999978542
step 4100, training accuracy 0.980000019073
step 4200, training accuracy 0.980000019073
step 4300, training accuracy 1.0
step 4400, training accuracy 0.980000019073
step 4500, training accuracy 0.939999997616
step 4600, training accuracy 1.0
step 4700, training accuracy 0.980000019073
step 4800, training accuracy 0.959999978542
step 4900, training accuracy 1.0
test accuracy: 0.986999988556
```
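At the end, the script evaluates the 10,000 MNIST test images in 10 blocks of 1000 (presumably to keep each `sess.run` call small) and averages the per-block accuracies with `np.mean`. Because every block has the same size, the mean of the block accuracies equals the accuracy over the whole test set, so the reported figure (about 0.987 in the run above) is a genuine overall test accuracy. The NumPy sketch below checks this on synthetic correct/incorrect flags; the 0.987 hit rate and the random seed are arbitrary assumptions, not the real predictions.

```python
import numpy as np

rng = np.random.default_rng(42)

# Synthetic stand-in for 10,000 test predictions: True where the predicted
# class matches the label (roughly 98.7% correct, chosen arbitrarily).
correct = rng.random(10000) < 0.987

# Same layout as the script: 10 blocks of 1000 examples each.
blocks = correct.reshape(10, 1000)
block_accuracies = blocks.mean(axis=1)  # one accuracy per block, like each sess.run(accuracy, ...)
mean_of_blocks = np.mean(block_accuracies)

overall = correct.mean()
print(mean_of_blocks, overall)
print(np.isclose(mean_of_blocks, overall))  # equal because all blocks have the same size
```

Note that `reshape(10, 1000, ...)` slices the data contiguously, so block `i` is examples `i*1000` through `i*1000 + 999`; if the blocks had unequal sizes, a plain mean of block accuracies would no longer equal the overall accuracy.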

Disclaimer: This article was reposted or adapted from the internet and the original author could not be identified; copyright belongs to the original author. If there is any copyright concern, please contact us to have it removed.