本篇文章主要依托于官方demo,在官网demo上进行修改来体现如何在一个常规的app上加入深度学习的模型。因为对于在app中加入对应的模型也只是将app搜集的数据导入模型并进行处理,处理完之后将结果返回给app并进行后面的操作。其中只有处理的过程会涉及tensorflow,而本文主要介绍tensorflow处理的过程。所以需要依附于具体的app。

一、环境准备

要想在安卓手机上运行首先需要在app上有对应的tensorflow环境。

二、模型准备。

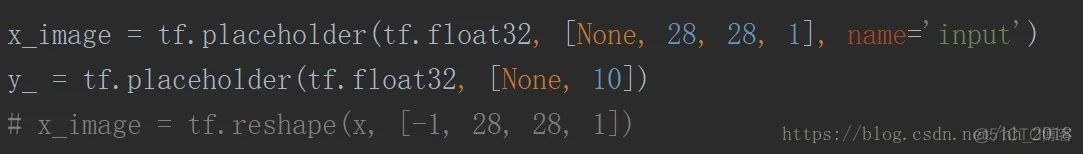

在训练Mnist模型的过程中增加输入节点的名字以及将模型存为.pb文件。 其中对节点输入名字主要是为了在调用时可以通过参数的名字指定需要传入和输出的节点张量。另外需要注意模型的输入过程找中所有用占位符定义的变量都是需要定义对应的变量名字。因为所有占位符的变量均是在feed的时候传入的值,如果不定义名字无法在使用时为其传入值。此时模型的调用会报缺少东西。

例如:我在构造模型时对keep_prod定义了一个32位的float型占位符。但是没有对其命名。在调用pb文件时报缺少一个32位float型数字(由于调试过程没有截图就不呈现具体的报错内容了)。

构建pb文件:构建pb文件的过程主要涉及代码,直接以代码说明。原始的MNINST训练代码见我前面的博客( MNIST原始代码),具体更改部分如下:

将上述四副截图分别按1,2,3,4的顺序排列起来解释如下:

1:主要定义输入,由于我们输入自己的任意的一个图片打开之后是一个矩阵的形式,因此直接以一个28x28x1的tensor作为输入,不以784维的一维向量作为输入。采用这种输入方式对于一个图片只需要将其变为28x28大小并将其变为灰度就可以了。

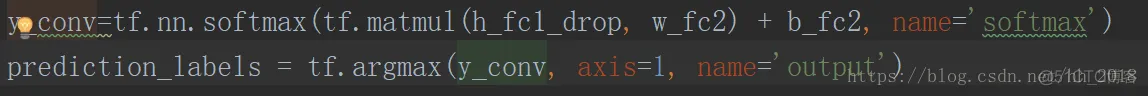

2. 定义了softmax和output,其中一个输出的是各个值的概率,一个是最后的值。可以根据需求自己调用。

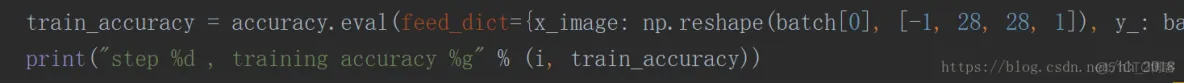

3. 由于输入是28x28x1的张量,所以对模型输入的形式进行了修改。

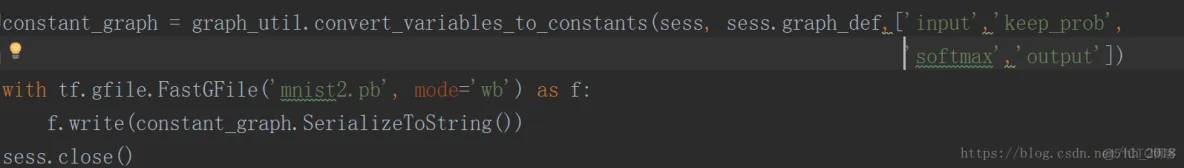

4. 将整个结果保存成pb文件。

三、模型调用

首先需要把pb文件放在assets下面,并新建一个txt文件,里面从0-9表示标签。然后增加一个类(主要是构建MNIST的模型),具体如下:

package org.tensorflow.demo;

import android.content.res.AssetManager;

import android.graphics.Bitmap;

import android.os.Trace;

import android.util.Log;

import org.tensorflow.Operation;

import org.tensorflow.contrib.android.TensorFlowInferenceInterface;

import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStreamReader;

import java.util.ArrayList;

import java.util.Comparator;

import java.util.List;

import java.util.PriorityQueue;

import java.util.Vector;

/** A classifier specialized to label images using TensorFlow. */

public class TensorFlowMnistClassifier implements Classifier {

private static final String TAG = "TensorFlowImageClassifier";

// Only return this many results with at least this confidence.

private static final int MAX_RESULTS = 10;

private static final float THRESHOLD = 0.1f;

// Config values.

private String inputName;

private String outputName;

private String keep_pro;

private int inputSize;

//private int numClass;

// Pre-allocated buffers.

private Vector<String> labels = new Vector<String>();

private int[] intValues;

private float[] floatValues;

private float[] floatKeep;

private float[] outputs;

private String[] outputNames;

private boolean logStats = false;

private TensorFlowInferenceInterface inferenceInterface;

private TensorFlowMnistClassifier() {}

/**

* Initializes a native TensorFlow session for classifying images.

*

* @param assetManager The asset manager to be used to load assets.

* @param modelFilename The filepath of the model GraphDef protocol buffer.

* @param labelFilename The filepath of label file for classes.

* @param inputSize The input size. A square image of inputSize x inputSize is assumed.

* @param inputName The label of the image input node.

* @param outputName The label of the output node.

* @throws IOException

*/

public static Classifier create(

AssetManager assetManager,

String modelFilename,

String labelFilename,

int inputSize,

String inputName,

String outputName,

int numClass) {

TensorFlowMnistClassifier c = new TensorFlowMnistClassifier();

c.inputName = inputName;

c.outputName = outputName;

// Read the label names into memory.

// TODO(andrewharp): make this handle non-assets.

String actualFilename = labelFilename.split("file:///android_asset/")[1];

Log.i(TAG, "Reading labels from: " + actualFilename);

BufferedReader br = null;

try {

br = new BufferedReader(new InputStreamReader(assetManager.open(actualFilename)));

String line;

while ((line = br.readLine()) != null) {

c.labels.add(line);

}

br.close();

} catch (IOException e) {

throw new RuntimeException("Problem reading label file!" , e);

}

c.inferenceInterface = new TensorFlowInferenceInterface(assetManager, modelFilename);

c.inputSize = inputSize;

//c.numClass = numClass;

c.keep_pro = "keep_prob";

// Pre-allocate buffers.

c.outputNames = new String[] {outputName};

c.intValues = new int[inputSize * inputSize];

c.floatValues = new float[inputSize * inputSize];

c.floatKeep = new float[1];

c.outputs = new float[numClass];

return c;

}

@Override

public List<Recognition> recognizeImage(final Bitmap bitmap) {

// Log this method so that it can be analyzed with systrace.

Trace.beginSection("recognizeImage");

Trace.beginSection("preprocessBitmap");

// Preprocess the image data from 0-255 int to normalized float based

// on the provided parameters.

bitmap.getPixels(intValues, 0, bitmap.getWidth(), 0, 0, bitmap

.getWidth(), bitmap.getHeight());

for (int i = 0; i < intValues.length; ++i) {

final int val = intValues[i];

//对输入的图片进行灰化处理

final int r = (val >> 16) & 0xff;

final int g = (val >> 8) & 0xff;

final int b = val & 0xff;

floatValues[i]=(float) (0.3 * r + 0.59 * g + 0.11 * b);

}

Trace.endSection();

floatKeep[0] = (float)1.0;

// Copy the input data into TensorFlow.向图中输入数据

Trace.beginSection("feed");

inferenceInterface.feed(inputName, floatValues, 1, inputSize, inputSize, 1);

//inferenceInterface.feed(keep_pro, floatKeep,1);

Trace.endSection();

// Run the inference call.运行出需要的结果

Trace.beginSection("run");

inferenceInterface.run(outputNames, logStats);

Trace.endSection();

// Copy the output Tensor back into the output array.将结果拿出来并进行存储

Trace.beginSection("fetch");

inferenceInterface.fetch(outputName, outputs);

Trace.endSection();

// Find the best classifications.

PriorityQueue<Recognition> pq =

new PriorityQueue<Recognition>(

10,

new Comparator<Recognition>() {

@Override

public int compare(Recognition lhs, Recognition rhs) {

// Intentionally reversed to put high confidence at the head of the queue.

return Float.compare(rhs.getConfidence(), lhs.getConfidence());

}

});

for (int i = 0; i < outputs.length; ++i) {

if (outputs[i] > THRESHOLD) {

pq.add(

new Recognition(

"" + i, labels.size() > i ? labels.get(i) : "unknown", outputs[i], null));

}

}

final ArrayList<Recognition> recognitions = new ArrayList<Recognition>();

int recognitionsSize = Math.min(pq.size(), MAX_RESULTS);

for (int i = 0; i < recognitionsSize; ++i) {

recognitions.add(pq.poll());

}

Trace.endSection(); // "recognizeImage"

return recognitions;

}

@Override

public void enableStatLogging(boolean logStats) {

this.logStats = logStats;

}

@Override

public String getStatString() {

return inferenceInterface.getStatString();

}

@Override

public void close() {

inferenceInterface.close();

}

}

对于如何调用这个类代码如下:

package org.tensorflow.demo;

import android.graphics.Bitmap;

import android.graphics.Bitmap.Config;

import android.graphics.Canvas;

import android.graphics.Matrix;

import android.graphics.Paint;

import android.graphics.Typeface;

import android.media.ImageReader.OnImageAvailableListener;

import android.os.SystemClock;

import android.util.Size;

import android.util.TypedValue;

import org.tensorflow.demo.OverlayView.DrawCallback;

import org.tensorflow.demo.env.BorderedText;

import org.tensorflow.demo.env.ImageUtils;

import org.tensorflow.demo.env.Logger;

import java.util.List;

import java.util.Vector;

public class MnistActivity extends CameraActivity implements OnImageAvailableListener {

private static final Logger LOGGER = new Logger();

protected static final boolean SAVE_PREVIEW_BITMAP = false;

private ResultsView resultsView;

private Bitmap rgbFrameBitmap = null;

private Bitmap croppedBitmap = null;

private Bitmap cropCopyBitmap = null;

private long lastProcessingTimeMs;

private static final int INPUT_SIZE = 28;

private static final String INPUT_NAME = "input";

private static final String OUTPUT_NAME = "softmax";

private static final int NUM_CLASS = 10;

private static final String MODEL_FILE = "file:///android_asset/mnist.pb";

private static final String LABEL_FILE =

"file:///android_asset/mnist.txt";

private static final boolean MAINTAIN_ASPECT = true;

private static final Size DESIRED_PREVIEW_SIZE = new Size(640, 480);

private Integer sensorOrientation;

private Classifier classifier;

private Matrix frameToCropTransform;

private Matrix cropToFrameTransform;

private BorderedText borderedText;

@Override

protected int getLayoutId() {

return R.layout.camera_connection_fragment;

}

@Override

protected Size getDesiredPreviewFrameSize() {

return DESIRED_PREVIEW_SIZE;

}

private static final float TEXT_SIZE_DIP = 10;

@Override

public void onPreviewSizeChosen(final Size size, final int rotation) {

final float textSizePx = TypedValue.applyDimension(

TypedValue.COMPLEX_UNIT_DIP, TEXT_SIZE_DIP, getResources().getDisplayMetrics());

borderedText = new BorderedText(textSizePx);

borderedText.setTypeface(Typeface.MONOSPACE);

classifier =

TensorFlowMnistClassifier.create(

getAssets(),

MODEL_FILE,

LABEL_FILE,

INPUT_SIZE,

INPUT_NAME,

OUTPUT_NAME,

NUM_CLASS);

previewWidth = size.getWidth();

previewHeight = size.getHeight();

sensorOrientation = rotation - getScreenOrientation();

LOGGER.i("Camera orientation relative to screen canvas: %d", sensorOrientation);

LOGGER.i("Initializing at size %dx%d", previewWidth, previewHeight);

rgbFrameBitmap = Bitmap.createBitmap(previewWidth, previewHeight, Config.ARGB_8888);

croppedBitmap = Bitmap.createBitmap(INPUT_SIZE, INPUT_SIZE, Config.ARGB_8888);

frameToCropTransform = ImageUtils.getTransformationMatrix(

previewWidth, previewHeight,

INPUT_SIZE, INPUT_SIZE,

sensorOrientation, MAINTAIN_ASPECT);

cropToFrameTransform = new Matrix();

frameToCropTransform.invert(cropToFrameTransform);

addCallback(

new DrawCallback() {

@Override

public void drawCallback(final Canvas canvas) {

renderDebug(canvas);

}

});

}

@Override

protected void processImage() {

rgbFrameBitmap.setPixels(getRgbBytes(), 0, previewWidth, 0, 0, previewWidth, previewHeight);

final Canvas canvas = new Canvas(croppedBitmap);

canvas.drawBitmap(rgbFrameBitmap, frameToCropTransform, null);

// For examining the actual TF input.

if (SAVE_PREVIEW_BITMAP) {

ImageUtils.saveBitmap(croppedBitmap);

}

runInBackground(

new Runnable() {

@Override

public void run() {

final long startTime = SystemClock.uptimeMillis();

final List<Classifier.Recognition> results = classifier

.recognizeImage(croppedBitmap);

lastProcessingTimeMs = SystemClock.uptimeMillis() - startTime;

LOGGER.i("Detect: %s", results);

cropCopyBitmap = Bitmap.createBitmap(croppedBitmap);

if (resultsView == null) {

resultsView = (ResultsView) findViewById(R.id.results);

}

resultsView.setResults(results);

requestRender();

readyForNextImage();

}

});

}

@Override

public void onSetDebug(boolean debug) {

classifier.enableStatLogging(debug);

}

private void renderDebug(final Canvas canvas) {

if (!isDebug()) {

return;

}

final Bitmap copy = cropCopyBitmap;

if (copy != null) {

final Matrix matrix = new Matrix();

final float scaleFactor = 2;

matrix.postScale(scaleFactor, scaleFactor);

matrix.postTranslate(

canvas.getWidth() - copy.getWidth() * scaleFactor,

canvas.getHeight() - copy.getHeight() * scaleFactor);

canvas.drawBitmap(copy, matrix, new Paint());

final Vector<String> lines = new Vector<String>();

if (classifier != null) {

String statString = classifier.getStatString();

String[] statLines = statString.split("\n");

for (String line : statLines) {

lines.add(line);

}

}

lines.add("Frame: " + previewWidth + "x" + previewHeight);

lines.add("Crop: " + copy.getWidth() + "x" + copy.getHeight());

lines.add("View: " + canvas.getWidth() + "x" + canvas.getHeight());

lines.add("Rotation: " + sensorOrientation);

lines.add("Inference time: " + lastProcessingTimeMs + "ms");

borderedText.drawLines(canvas, 10, canvas.getHeight() - 10, lines);

}

}

}

另外在AndroidMainfest.xml中添加

<activity android:name="org.tensorflow.demo.MnistActivity"

android:screenOrientation="portrait"

android:label="@string/activity_name_mnist">

<intent-filter>

<action android:name="android.intent.action.MAIN" />

<category android:name="android.intent.category.LAUNCHER" />

<category android:name="android.intent.category.LEANBACK_LAUNCHER" />

</intent-filter>

</activity>

最后运行整个工程,生成的apk中就包括TF Mnist的图标以及对应的功能。

四、注意:

在上述代码中主要更改的是模型文件,构造的时候对输入数据的处理过程,以及输出的数据。对于每一个模型来说,模型的构建以及运行都是一样的,对于移植的时候主要是考虑运行的时候输入的数据格式是不是和自己需求的一样即可、输出的是什么数据、什么形式。至于输出数据之后的处理过程是根据具体的业务需求具体来实现的。

免责声明:本文系网络转载或改编,未找到原创作者,版权归原作者所有。如涉及版权,请联系删