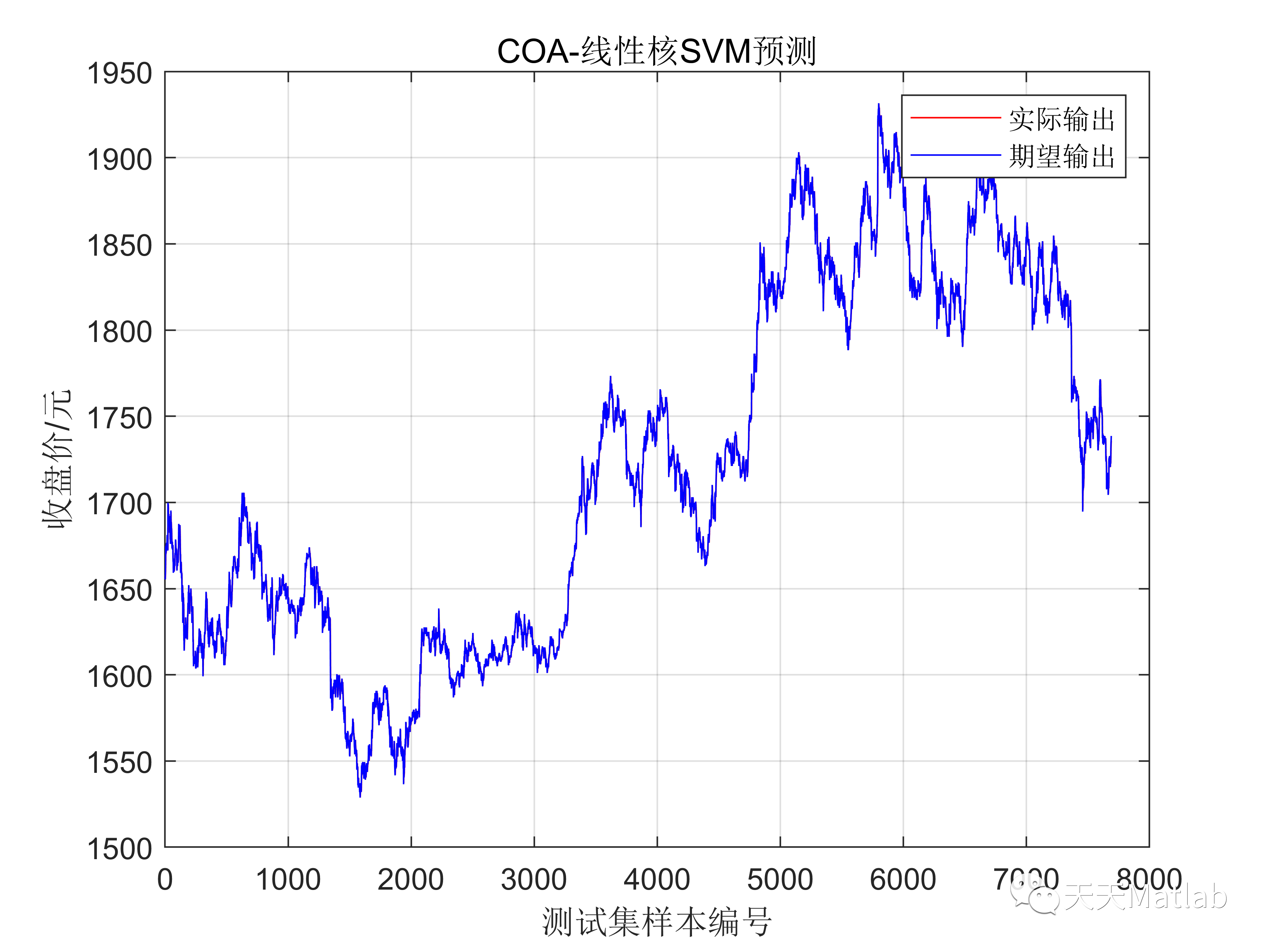

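This work proposes a stock price prediction method based on the coyote optimization algorithm (COA) and the support vector machine (SVM). Because the parameters of an SVM prediction model are difficult to determine, COA is used to optimize the SVM's penalty factor and kernel function parameter, yielding a COA-SVM stock price prediction model.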
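
As a rough illustration of what optimizing the penalty factor and kernel parameter means in practice, the sketch below builds the kind of objective a swarm optimizer would minimize: the cross-validated error of an RBF-kernel SVM regressor as a function of the two hyperparameters. It is not the COA-SVM model itself. The synthetic random-walk series, the 5-step lag window, the log2 search space, and the names `Xtr`, `ytr`, `cvmse`, and `objective` are all assumptions made for illustration, and `fitrsvm`, `cvpartition`, and `kfoldLoss` require the Statistics and Machine Learning Toolbox.

```matlab
% Sketch only: synthetic data stands in for real stock prices (assumption).
rng(0);                                   % reproducible example
price = 50 + cumsum(randn(200,1));        % synthetic random-walk "price" series
lag = 5;                                  % predict the next value from the previous 5
m = numel(price) - lag;                   % number of training windows
Xtr = zeros(m, lag);
for k = 1:lag
    Xtr(:,k) = price(k:k+m-1);            % column k: value at offset k-1 in each window
end
ytr = price(lag+1:end);                   % target: the value right after each window

cvp = cvpartition(m, 'KFold', 5);         % fixed folds keep the objective deterministic

% theta = [log2(C); log2(kernel scale)]; returns the 5-fold cross-validated MSE
cvmse = @(theta) kfoldLoss(fitrsvm(Xtr, ytr, ...
    'KernelFunction', 'rbf', ...
    'BoxConstraint', 2^theta(1), ...
    'KernelScale', 2^theta(2), ...
    'Standardize', true, ...
    'CVPartition', cvp));

% Column-wise wrapper: 2-by-n candidates in, 1-by-n losses out
objective = @(Theta) arrayfun(@(j) cvmse(Theta(:,j)), 1:size(Theta,2));

lmt = [-5 10; -5 5];                      % search bounds in log2 space (assumption)
losses = objective([0 2; 0 1]);           % evaluate two candidate settings
disp(losses);
```

A function with this column-wise shape can be handed to a population-based optimizer in place of COA, including the `hho` listing in this article. Two caveats apply: random K-fold splitting ignores the time ordering of the series, so a real forecasting study would more likely use a chronological split; and `hho`, when the dimension is 2, also evaluates the objective on a 100-by-100 grid for its contour plot, which is costly for an objective like this one.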

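A support vector machine uses samples with known class labels as training samples, learns how data of the same class cluster in feature space, and then classifies test samples for verification; this verification makes it possible to correct misclassified data. Using physical examination data as the data set, this paper first applies factor analysis to reduce the dimensionality of the high-dimensional data, consolidating all indicators into a few composite indicators. To reduce the error caused by the indicators' differing measurement scales, the data are normalized in MATLAB and then grouped by cluster analysis. Finally, a least squares support vector machine (LS-SVM) classifier is used to verify the grouping, from which the classification accuracy is computed, confirming that the classification is accurate and reasonable.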
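
The normalization and LS-SVM classification stages of this pipeline can be sketched in a few lines of base MATLAB. This is a minimal illustration under stated assumptions, not the paper's implementation: two synthetic Gaussian classes stand in for the physical examination data, factor analysis and cluster analysis are skipped, and the regularization value `gam`, the kernel width `sig2`, and all variable names are made up for the example. The classifier follows the standard LS-SVM formulation, in which training reduces to solving one linear system.

```matlab
% Sketch only: synthetic two-class data replaces the real data (assumption).
rng(1);
X = [randn(40,2) + 2; randn(40,2) - 2];    % 40 samples per class, 2 features
y = [ones(40,1); -ones(40,1)];             % labels in {+1, -1}

% Min-max normalization so that every feature lies in [0, 1]
Xn = (X - min(X)) ./ (max(X) - min(X));

gam  = 10;                                 % regularization parameter (assumption)
sig2 = 0.5;                                % RBF kernel width sigma^2 (assumption)

% RBF kernel matrix between the rows of A and the rows of B
sqdist = @(A,B) sum(A.^2,2) + sum(B.^2,2)' - 2*(A*B');
rbf    = @(A,B) exp(-sqdist(A,B) / (2*sig2));

% LS-SVM training: solve [0 y'; y Omega + I/gam] * [b; alpha] = [0; 1]
N     = numel(y);
Omega = (y*y') .* rbf(Xn, Xn);
sol   = [0, y'; y, Omega + eye(N)/gam] \ [0; ones(N,1)];
b     = sol(1);
alpha = sol(2:end);

% Decision rule: sign( sum_i alpha_i * y_i * K(z, x_i) + b )
classify = @(Z) sign(rbf(Z, Xn) * (alpha .* y) + b);

acc = mean(classify(Xn) == y);             % accuracy on the training samples
fprintf('training accuracy: %.3f\n', acc);
```

The accuracy printed here is measured on the training samples only; in a real study the meaningful figure would be accuracy on held-out data. The code relies on implicit expansion, so it assumes MATLAB R2016b or later.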

    function [fbst, xbst, performance] = hho(objective, d, lmt, n, T, S)
    % Harris hawks optimization algorithm
    % inputs:
    %   objective - function handle, the objective function; it receives a
    %               d-by-n matrix (one candidate per column) and returns 1-by-n
    %   d   - scalar, dimension of the optimization problem
    %   lmt - d-by-2 matrix, lower and upper bounds of the decision variables
    %   n   - scalar, swarm size
    %   T   - scalar, maximum iteration
    %   S   - scalar, number of independent runs
    % notes: uses implicit expansion (R2016b or later) and normrnd
    %        (Statistics and Machine Learning Toolbox)
    % date: 2021-05-09
    % author: elkman, github.com/ElkmanY/

    %% Levy flight
    beta = 1.5;
    % Mantegna's scale factor for Levy-stable steps
    sigma = ( gamma(1+beta)*sin(pi*beta/2) / ...
        (gamma((1+beta)/2)*beta*2^((beta-1)/2)) ).^(1/beta);
    Levy = @(x) 0.01*normrnd(0,1,d,x)*sigma./abs(normrnd(0,1,d,x)).^(1/beta);

    %% algorithm procedure
    f_rabbit = zeros(S,T);      % best fitness per run and iteration
    x_rabbit = zeros(d,T,S);    % best position per iteration and run
    E = zeros(T,n);             % escaping energy per iteration and hawk
    tic;
    for s = 1:S
        %% Initialization
        X = lmt(:,1) + (lmt(:,2) - lmt(:,1)).*rand(d,n);
        for t = 1:T
            F = objective(X);
            [f_rabbit(s,t), i_rabbit] = min(F);
            x_rabbit(:,t,s) = X(:,i_rabbit);
            xr = x_rabbit(:,t,s);
            J = 2*(1-rand(d,1));
            E0 = 2*rand(1,n)-1;
            E(t,:) = 2*E0*(1-t/T);
            absE = abs(E(t,:));                       % |E| for every hawk (1-by-n)
            p1 = absE>=1;                             %eq(1)
            r = rand(1,n);
            p2 = (r>=0.5) & (absE>=0.5) & (absE<1);   %eq(4)
            p3 = (r>=0.5) & (absE<0.5);               %eq(6)
            p4 = (r<0.5) & (absE>=0.5) & (absE<1);    %eq(10)
            p5 = (r<0.5) & (absE<0.5);                %eq(11)
            %% update locations
            rh = randi([1,n],1,n);
            flag1 = rand(1,n)>=0.5;
            Y = xr - E(t,:).*abs( J.*xr - X );
            Z = Y + rand(d,n).*Levy(n);
            FY = objective(Y);                        % evaluate each candidate set once
            FZ = objective(Z);
            flag2 = (FY<FZ) & (FY<F);
            flag3 = (FY>FZ) & (FZ<F);
            flag4 = (~flag2) & (~flag3);
            % exploration, eq(1): perch near a random hawk (flag1), or relative to
            % the rabbit and the mean hawk position mean(X,2) (~flag1)
            X_ = p1.*( (X(:,rh) - rand(1,n).*abs( X(:,rh) - 2*rand(1,n).*X )).*flag1 +...
                ((xr - mean(X,2)) - rand(1,n).*( lmt(:,1) + (lmt(:,2) - lmt(:,1)).*rand(d,n) )).*(~flag1) )...
                + p2.*( xr - X - E(t,:).*abs( J.*xr - X ) )...
                + p3.*( xr - E(t,:).*abs( xr - X ) )...
                + p4.*( Y.*flag2 + Z.*flag3 + ( lmt(:,1) + (lmt(:,2) - lmt(:,1)).*rand(d,n) ).*flag4 )...
                + p5.*( Y.*flag2 + Z.*flag3 + ( lmt(:,1) + (lmt(:,2) - lmt(:,1)).*rand(d,n) ).*flag4 );
            X_(:,i_rabbit) = xr;                      % keep the current best unchanged
            X = X_;
        end
    end

    %% outputs
    performance = [min(f_rabbit(:,T)); mean(f_rabbit(:,T)); std(f_rabbit(:,T))];
    timecost = toc;             % elapsed time (not returned)
    [fbst, ibst] = min(f_rabbit(:,T));
    xbst = x_rabbit(:,T,ibst);

    %% plot data
    % Convergence Curve
    figure('Name','Convergence Curve');
    semilogy(1:T,mean(f_rabbit,1),'b','LineWidth',1.5);
    box on
    xlabel('Iteration','FontName','Arial');
    ylabel('Fitness/Score','FontName','Arial');
    title('Convergence Curve','FontName','Arial');
    if d == 2
        % Trajectory of Global Optimal
        figure('Name','Trajectory of Global Optimal');
        x1 = linspace(lmt(1,1),lmt(1,2));
        x2 = linspace(lmt(2,1),lmt(2,2));
        [X1,X2] = meshgrid(x1,x2);
        V = reshape(objective([X1(:),X2(:)]'),size(X1));
        contour(X1,X2,log10(V),100);   % notice log10(V)
        hold on
        plot(x_rabbit(1,:,1),x_rabbit(2,:,1),'r-x','LineWidth',1);
        hold off
        xlabel('\it{x}_1','FontName','Times New Roman');
        ylabel('\it{x}_2','FontName','Times New Roman');
        title('Trajectory of Global Optimal','FontName','Arial');
    end
    end
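
To try the `hho` listing above, the objective has to accept a d-by-n matrix with one candidate per column and return a 1-by-n row of fitness values, because the whole swarm is evaluated in a single call. The sketch below defines two common test functions in that shape and calls `hho` only if a file of that name is on the MATLAB path. The test functions, the bounds, and the swarm size, iteration count, and run count are illustrative choices, not values from the source.

```matlab
% Column-wise test objectives: d-by-n in, 1-by-n out
sphere    = @(X) sum(X.^2, 1);
rastrigin = @(X) 10*size(X,1) + sum(X.^2 - 10*cos(2*pi*X), 1);

d   = 2;
lmt = repmat([-5.12, 5.12], d, 1);    % same bounds in every dimension (assumption)

% Quick shape check on a random swarm of 6 candidates
Xtest = lmt(:,1) + (lmt(:,2) - lmt(:,1)) .* rand(d, 6);
Ftest = sphere(Xtest);
disp(size(Ftest));                    % one fitness value per column

if exist('hho', 'file') == 2
    % 30 hawks, 200 iterations, 5 independent runs (illustrative values)
    [fbst, xbst, performance] = hho(sphere, d, lmt, 30, 200, 5);
    fprintf('best fitness: %.3e\n', fbst);
    fprintf('min / mean / std over runs: %.3e / %.3e / %.3e\n', performance);
end
```

With `d` equal to 2 the function opens a second figure showing the trajectory of the best position of the first run on a contour plot of the objective; for any other dimension only the convergence curve is drawn.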
