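By the time YOLO reached YOLOv3, the series had essentially hit a high point. YOLOv3 shows up in many practical tasks, and for simpler scenes, for example those without very dense or extremely small objects, YOLOv2 alone is usually enough. Beyond the YOLO family there is plenty of other excellent work, such as RetinaNet and SSD, whose architectures are just as simple. SSD is also common in practice, although its performance is slightly below that of YOLOv3. This is partly because SSD itself never received follow-up upgrades; instead, many newer works such as RFB-Net and DSSD use it as a baseline. In terms of detection performance, RetinaNet is no worse than YOLOv3, but compared with YOLOv3 it has one fairly serious problem: it is too slow. The main reasons are that RetinaNet uses a larger input image size and a heavier detection head.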

YOLOv4 innovations

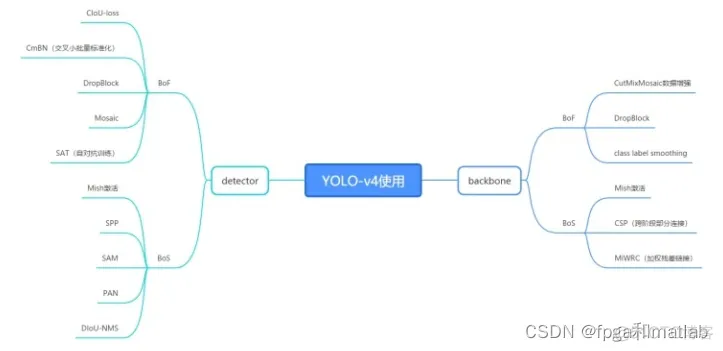

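1. Input: improvements applied to the input side during training, mainly Mosaic data augmentation, CmBN (cross mini-batch normalization), and SAT (self-adversarial training).

2. Backbone: a combination of several techniques, including CSPDarknet53, the Mish activation function, and DropBlock.

3. Neck: object detection networks often insert some layers between the backbone and the final output layers; in YOLOv4 these are the SPP module and the FPN+PAN structure.

4. Head: the anchor mechanism of the output layer is the same as in YOLOv3. The main changes are the bounding-box regression loss used during training, which becomes CIoU loss, and the NMS used to filter predicted boxes, which becomes DIoU-NMS.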
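
The two head changes in item 4 can be illustrated with a minimal sketch in plain Python. It assumes boxes in `(x1, y1, x2, y2)` form with positive width and height; the function names are hypothetical and this is not the code used by any particular YOLOv4 implementation. CIoU adds a center-distance penalty and an aspect-ratio penalty to `1 - IoU`, and DIoU-NMS subtracts the same center-distance term from IoU before comparing against the suppression threshold.

```python
import math

def iou_and_center_term(a, b):
    """Return (IoU, normalized squared center distance) for two boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    iou = inter / (area_a + area_b - inter)
    # Squared distance between the two box centers.
    d2 = ((a[0] + a[2]) - (b[0] + b[2])) ** 2 / 4 \
        + ((a[1] + a[3]) - (b[1] + b[3])) ** 2 / 4
    # Squared diagonal of the smallest box enclosing both boxes.
    c2 = (max(a[2], b[2]) - min(a[0], b[0])) ** 2 \
        + (max(a[3], b[3]) - min(a[1], b[1])) ** 2
    return iou, d2 / c2

def ciou_loss(pred, target):
    """CIoU loss: 1 - IoU + center-distance term + alpha * aspect-ratio term."""
    iou, center = iou_and_center_term(pred, target)
    # v measures how different the two aspect ratios are.
    v = (4 / math.pi ** 2) * (
        math.atan((target[2] - target[0]) / (target[3] - target[1]))
        - math.atan((pred[2] - pred[0]) / (pred[3] - pred[1]))
    ) ** 2
    alpha = v / (1 - iou + v) if v > 0 else 0.0
    return 1 - iou + center + alpha * v

def diou_nms(boxes, scores, threshold=0.5):
    """Greedy NMS that suppresses a box when IoU minus the center term >= threshold."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        remaining = []
        for i in order:
            iou, center = iou_and_center_term(boxes[best], boxes[i])
            if iou - center < threshold:
                remaining.append(i)
        order = remaining
    return keep
```

Because the center-distance term is subtracted, two heavily overlapping boxes whose centers are far apart are less likely to suppress each other than under plain IoU-based NMS.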

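Put simply, YOLOv4 builds on the existing YOLO detection architecture and adopts the best optimization strategies from recent CNN research, with varying degrees of improvement in data processing, the backbone, network training, activation functions, loss functions, and more. It offers no theoretical innovation, but many engineers will welcome it for its systematic trial of optimization techniques. The paper reads like a survey of object detection tricks, and the result is a new baseline for object detection that balances FPS and precision.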
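
As one concrete instance of the activation-function change, Mish is defined as `x * tanh(softplus(x))`. The following is a minimal scalar sketch in plain Python; the function names are illustrative, and real frameworks apply the same formula element-wise on tensors.

```python
import math

def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def mish(x):
    """Mish activation: smooth, non-monotonic, close to identity for large x."""
    return x * math.tanh(softplus(x))
```

For large positive inputs Mish behaves like the identity, and for large negative inputs it approaches zero from below instead of clamping hard at zero as ReLU does.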

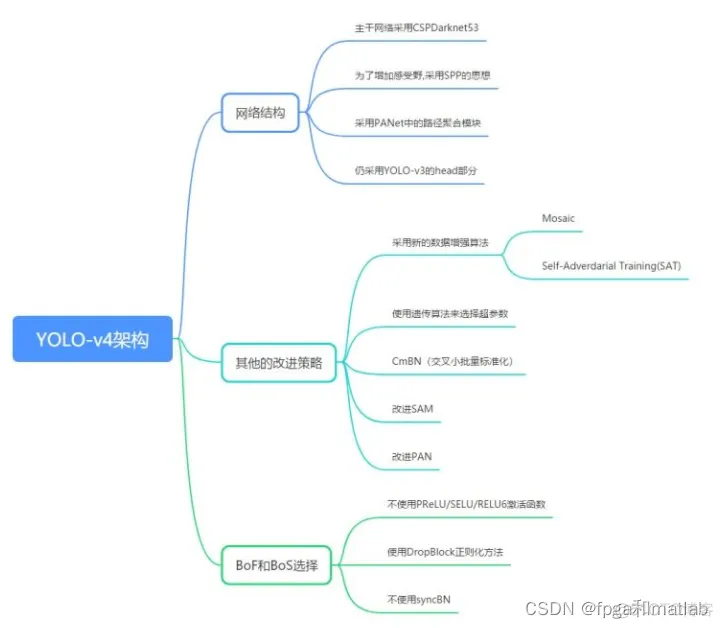

In terms of network structure, YOLOv4 keeps the YOLOv3 head, changes the backbone to CSPDarknet53, adopts SPP (spatial pyramid pooling) to enlarge the receptive field, and uses PANet as the neck.
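
To show how SPP enlarges the receptive field without changing the spatial size, here is a minimal single-channel sketch in plain Python. It assumes stride-1 max pooling with kernel sizes 5, 9, and 13 plus an identity branch, with the results concatenated along the channel axis; the function names are hypothetical and a real implementation would operate on multi-channel tensors.

```python
def max_pool_same(feature, k):
    """Stride-1 max pooling over a k x k window; output size equals input size."""
    h, w = len(feature), len(feature[0])
    r = k // 2
    return [
        [
            max(
                feature[yy][xx]
                for yy in range(max(0, y - r), min(h, y + r + 1))
                for xx in range(max(0, x - r), min(w, x + r + 1))
            )
            for x in range(w)
        ]
        for y in range(h)
    ]

def spp(feature, kernel_sizes=(5, 9, 13)):
    """Return the identity branch followed by one pooled map per kernel size."""
    return [feature] + [max_pool_same(feature, k) for k in kernel_sizes]
```

Each output position in the pooled branches summarizes a progressively larger neighborhood, so the layers after SPP see context at several scales at once.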

A mind map of the techniques used in YOLOv4:

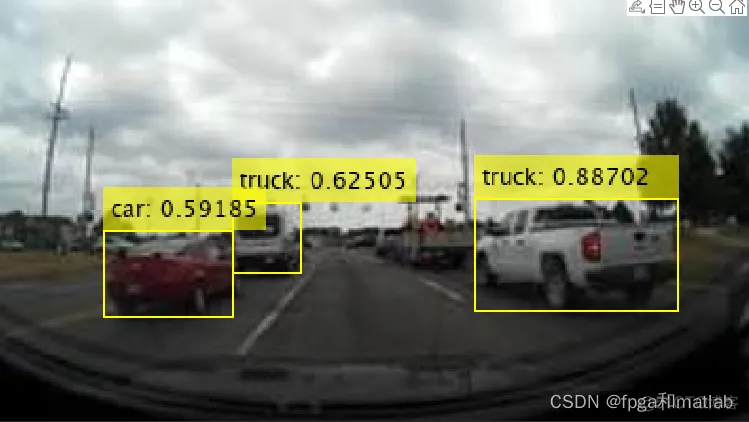

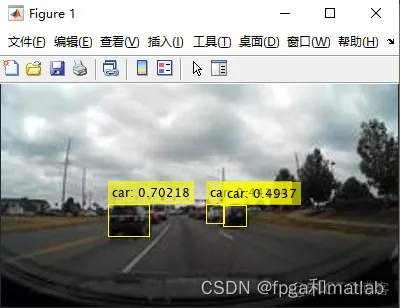

    clc;
    clear;
    close all;
    warning off;
    addpath(genpath(pwd));

    %% Download Pretrained Network
    % Set the modelName from the above ones to download that pretrained model.
    modelName = 'YOLOv4-coco';
    model = helper.downloadPretrainedYOLOv4(modelName);
    net = model.net;

    %% Load Data
    % Unzip the vehicle images and load the vehicle ground truth data.
    unzip vehicleDatasetImages.zip
    data = load('vehicleDatasetGroundTruth.mat');
    vehicleDataset = data.vehicleDataset;

    % Add the full path to the local vehicle data folder.
    vehicleDataset.imageFilename = fullfile(pwd, vehicleDataset.imageFilename);

    rng('default')
    shuffledIndices = randperm(height(vehicleDataset));
    idx = floor(0.6 * length(shuffledIndices));
    trainingDataTbl = vehicleDataset(shuffledIndices(1:idx), :);
    testDataTbl = vehicleDataset(shuffledIndices(idx+1:end), :);

    % Create an image datastore for loading the images.
    imdsTrain = imageDatastore(trainingDataTbl.imageFilename);
    imdsTest = imageDatastore(testDataTbl.imageFilename);

    % Create a datastore for the ground truth bounding boxes.
    bldsTrain = boxLabelDatastore(trainingDataTbl(:, 2:end));
    bldsTest = boxLabelDatastore(testDataTbl(:, 2:end));

    % Combine the image and box label datastores.
    trainingData = combine(imdsTrain, bldsTrain);
    testData = combine(imdsTest, bldsTest);

    helper.validateInputData(trainingData);
    helper.validateInputData(testData);

    %% Data Augmentation
    augmentedTrainingData = transform(trainingData, @helper.augmentData);

    augmentedData = cell(4,1);
    for k = 1:4
        data = read(augmentedTrainingData);
        augmentedData{k} = insertShape(data{1,1}, 'Rectangle', data{1,2});
        reset(augmentedTrainingData);
    end
    figure
    montage(augmentedData, 'BorderSize', 10)

    %% Preprocess Training Data
    % Specify the network input size.
    networkInputSize = net.Layers(1).InputSize;

    preprocessedTrainingData = transform(augmentedTrainingData, @(data)helper.preprocessData(data, networkInputSize));

    % Read the preprocessed training data.
    data = read(preprocessedTrainingData);

    % Display the image with the bounding boxes.
    I = data{1,1};
    bbox = data{1,2};
    annotatedImage = insertShape(I, 'Rectangle', bbox);
    annotatedImage = imresize(annotatedImage,2);
    figure
    imshow(annotatedImage)

    % Reset the datastore.
    reset(preprocessedTrainingData);

    %% Modify Pretrained YOLO v4 Network
    rng(0)
    trainingDataForEstimation = transform(trainingData, @(data)helper.preprocessData(data, networkInputSize));
    numAnchors = 9;
    [anchorBoxes, meanIoU] = estimateAnchorBoxes(trainingDataForEstimation, numAnchors);

    % Specify the classNames to be used in the training.
    classNames = {'vehicle'};

    [lgraph, networkOutputs, anchorBoxes, anchorBoxMasks] = configureYOLOv4(net, classNames, anchorBoxes, modelName);

    %% Specify Training Options
    numEpochs = 90;
    miniBatchSize = 4;
    learningRate = 0.001;
    warmupPeriod = 1000;
    l2Regularization = 0.001;
    penaltyThreshold = 0.5;
    velocity = [];

    %% Train Model
    if canUseParallelPool
        dispatchInBackground = true;
    else
        dispatchInBackground = false;
    end

    mbqTrain = minibatchqueue(preprocessedTrainingData, 2,...
        "MiniBatchSize", miniBatchSize,...
        "MiniBatchFcn", @(images, boxes, labels) helper.createBatchData(images, boxes, labels, classNames), ...
        "MiniBatchFormat", ["SSCB", ""],...
        "DispatchInBackground", dispatchInBackground,...
        "OutputCast", ["", "double"]);

    % Convert layer graph to dlnetwork.
    net = dlnetwork(lgraph);

    % Create subplots for the learning rate and mini-batch loss.
    fig = figure;
    [lossPlotter, learningRatePlotter] = helper.configureTrainingProgressPlotter(fig);

    iteration = 0;
    % Custom training loop.
    for epoch = 1:numEpochs

        reset(mbqTrain);
        shuffle(mbqTrain);

        while(hasdata(mbqTrain))
            iteration = iteration + 1;

            [XTrain, YTrain] = next(mbqTrain);

            % Evaluate the model gradients and loss using dlfeval and the
            % modelGradients function.
            [gradients, state, lossInfo] = dlfeval(@modelGradients, net, XTrain, YTrain, anchorBoxes, anchorBoxMasks, penaltyThreshold, networkOutputs);

            % Apply L2 regularization.
            gradients = dlupdate(@(g,w) g + l2Regularization*w, gradients, net.Learnables);

            % Determine the current learning rate value.
            currentLR = helper.piecewiseLearningRateWithWarmup(iteration, epoch, learningRate, warmupPeriod, numEpochs);

            % Update the network learnable parameters using the SGDM optimizer.
            [net, velocity] = sgdmupdate(net, gradients, velocity, currentLR);

            % Update the state parameters of dlnetwork.
            net.State = state;

            % Display progress.
            if mod(iteration,10)==1
                helper.displayLossInfo(epoch, iteration, currentLR, lossInfo);
            end

            % Update training plot with new points.
            helper.updatePlots(lossPlotter, learningRatePlotter, iteration, currentLR, lossInfo.totalLoss);
        end
    end

    % Save the trained model with the anchors.
    anchors.anchorBoxes = anchorBoxes;
    anchors.anchorBoxMasks = anchorBoxMasks;

    save('yolov4_trained', 'net', 'anchors');

    %% Evaluate Model
    confidenceThreshold = 0.5;
    overlapThreshold = 0.5;

    % Create a table to hold the bounding boxes, scores, and labels returned by
    % the detector.
    numImages = size(testDataTbl, 1);
    results = table('Size', [0 3], ...
        'VariableTypes', {'cell','cell','cell'}, ...
        'VariableNames', {'Boxes','Scores','Labels'});

    % Run detector on images in the test set and collect results.
    reset(testData)
    while hasdata(testData)
        % Read the datastore and get the image.
        data = read(testData);
        image = data{1};

        % Run the detector.
        executionEnvironment = 'auto';
        [bboxes, scores, labels] = detectYOLOv4(net, image, anchors, classNames, executionEnvironment);

        % Collect the results.
        tbl = table({bboxes}, {scores}, {labels}, 'VariableNames', {'Boxes','Scores','Labels'});
        results = [results; tbl];
    end

    % Evaluate the object detector using Average Precision metric.
    [ap, recall, precision] = evaluateDetectionPrecision(results, testData);

    % The precision-recall (PR) curve shows how precise a detector is at varying
    % levels of recall. Ideally, the precision is 1 at all recall levels.

    % Plot precision-recall curve.
    figure
    plot(recall, precision)
    xlabel('Recall')
    ylabel('Precision')
    grid on
    title(sprintf('Average Precision = %.2f', ap))

    %% Detect Objects Using Trained YOLO v4
    reset(testData)
    data = read(testData);

    % Get the image.
    I = data{1};

    % Run the detector.
    executionEnvironment = 'auto';
    [bboxes, scores, labels] = detectYOLOv4(net, I, anchors, classNames, executionEnvironment);

    % Display the detections on image.
    if ~isempty(scores)
        I = insertObjectAnnotation(I, 'rectangle', bboxes, scores);
    end
    figure
    imshow(I)
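
The custom training loop combines three ideas: a learning rate with warmup, L2 regularization folded into the gradient, and an SGD-with-momentum update. The sketch below restates them on plain Python lists. The body of `helper.piecewiseLearningRateWithWarmup` is not shown in the listing, so the schedule here (fourth-power warmup, then step decay at 60% and 90% of the epochs) is an assumption, as are the function names and the momentum value of 0.9.

```python
def learning_rate(iteration, epoch, base_lr, warmup_period, num_epochs):
    """Assumed schedule: polynomial warmup, then piecewise-constant decay."""
    if iteration <= warmup_period:
        return base_lr * (iteration / warmup_period) ** 4
    if epoch < 0.6 * num_epochs:
        return base_lr
    if epoch < 0.9 * num_epochs:
        return base_lr * 0.1
    return base_lr * 0.01

def sgdm_step(weights, grads, velocity, lr, l2=0.001, momentum=0.9):
    """One SGD-with-momentum step with L2 regularization added to the gradient."""
    new_weights, new_velocity = [], []
    for w, g, v in zip(weights, grads, velocity):
        g = g + l2 * w              # L2 penalty contributes l2 * w to the gradient
        v = momentum * v - lr * g   # accumulate the momentum term
        new_velocity.append(v)
        new_weights.append(w + v)   # move the weight along the velocity
    return new_weights, new_velocity
```

The warmup keeps early updates small while the re-configured detection heads are still far from a good solution, which tends to make fine-tuning from pretrained weights more stable.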
