
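This article implements virtual drag-and-drop of objects using OpenCV and MediaPipe. The final result looks like this:

Virtual block drag-and-drop with OpenCV

The implementation consists of the following steps: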

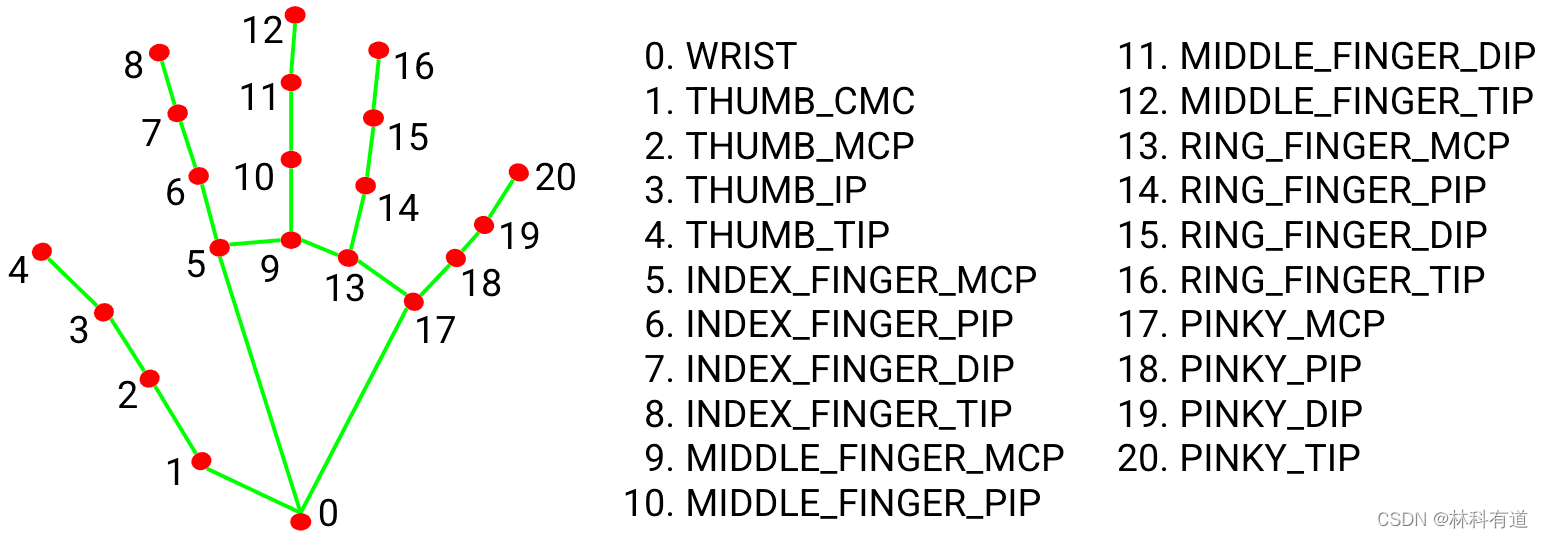

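Hand detection uses the hand-tracking solution in MediaPipe, which outputs the position coordinates (x, y, z) of 21 keypoints per hand, indexed 0 to 20. The keypoints are shown in the figure below: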
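For reference, the 21 keypoint indices can also be written out in plain Python. This is only a sketch: the names follow MediaPipe's `HandLandmark` enum, while `HAND_LANDMARKS` and `fingertip_ids` are hypothetical helpers introduced here for illustration and are not part of the original code.

```python
# Landmark names in index order (0..20), following MediaPipe's HandLandmark enum.
# HAND_LANDMARKS and fingertip_ids are illustrative helpers, not library APIs.
HAND_LANDMARKS = [
    "WRIST",
    "THUMB_CMC", "THUMB_MCP", "THUMB_IP", "THUMB_TIP",
    "INDEX_FINGER_MCP", "INDEX_FINGER_PIP", "INDEX_FINGER_DIP", "INDEX_FINGER_TIP",
    "MIDDLE_FINGER_MCP", "MIDDLE_FINGER_PIP", "MIDDLE_FINGER_DIP", "MIDDLE_FINGER_TIP",
    "RING_FINGER_MCP", "RING_FINGER_PIP", "RING_FINGER_DIP", "RING_FINGER_TIP",
    "PINKY_MCP", "PINKY_PIP", "PINKY_DIP", "PINKY_TIP",
]


def fingertip_ids():
    """Return the indices of the five fingertips, thumb to little finger."""
    return [i for i, name in enumerate(HAND_LANDMARKS) if name.endswith("_TIP")]
```

The drag gesture later in the article only needs two of these: index 8 (index fingertip) and index 12 (middle fingertip).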

The hand-detection module is as follows:

    # handTrackingModule.py
    import cv2
    import mediapipe as mp
    import math
    from mediapipe.python.solutions.drawing_utils import DrawingSpec


    class HandDetector:
        def __init__(self, static_image_mode=False, maxHands=2, detectionCon=0.5, minTrackCon=0.5):
            """
            :param static_image_mode: static-image mode; detection is slower
            :param maxHands: maximum number of hands to detect
            :param detectionCon: minimum detection confidence
            :param minTrackCon: minimum tracking confidence
            """
            self.static_image_mode = static_image_mode
            self.maxHands = maxHands
            self.detectionCon = detectionCon
            self.minTrackCon = minTrackCon
            self.mpHands = mp.solutions.hands
            self.hands = self.mpHands.Hands(static_image_mode=self.static_image_mode,
                                            max_num_hands=self.maxHands,
                                            min_detection_confidence=self.detectionCon,
                                            min_tracking_confidence=self.minTrackCon)
            self.mpDraw = mp.solutions.drawing_utils
            self.tipIds = [4, 8, 12, 16, 20]  # fingertip keypoints, thumb to little finger
            self.fingers = []
            self.lmList = []
            self.results = None

        def findHands(self, img, draw=True, flip_type=False):
            """
            Detect hands in a BGR image.

            Returns (all_hands, img) when draw is True, otherwise (all_hands, None).
            """
            img_rgb = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
            self.results = self.hands.process(img_rgb)
            all_hands = []
            h, w, _ = img.shape
            if self.results.multi_hand_landmarks:
                for handType, handLms in zip(self.results.multi_handedness,
                                             self.results.multi_hand_landmarks):
                    my_hand = {}
                    mylmList = []
                    xList = []
                    yList = []
                    # Convert the normalized keypoints to pixel coordinates
                    for lm in handLms.landmark:
                        px, py, pz = int(lm.x * w), int(lm.y * h), int(lm.z * w)
                        mylmList.append([px, py, pz])
                        xList.append(px)
                        yList.append(py)
                    # Bounding box of the hand
                    xmin, xmax = min(xList), max(xList)
                    ymin, ymax = min(yList), max(yList)
                    boxW, boxH = xmax - xmin, ymax - ymin
                    bbox = xmin, ymin, boxW, boxH
                    cx, cy = bbox[0] + (bbox[2] // 2), bbox[1] + (bbox[3] // 2)
                    my_hand["lmList"] = mylmList
                    my_hand["bbox"] = bbox
                    my_hand["center"] = (cx, cy)
                    # Left or right hand (the label is swapped when flip_type is True)
                    if flip_type:
                        if handType.classification[0].label == "Right":
                            my_hand["type"] = "Left"
                        else:
                            my_hand["type"] = "Right"
                    else:
                        my_hand["type"] = handType.classification[0].label
                    all_hands.append(my_hand)
                    if draw:
                        self.mpDraw.draw_landmarks(img, handLms,
                                                   self.mpHands.HAND_CONNECTIONS,
                                                   connection_drawing_spec=DrawingSpec(color=(255, 255, 0)))
                        cv2.rectangle(img, (bbox[0] - 20, bbox[1] - 20),
                                      (bbox[0] + bbox[2] + 20, bbox[1] + bbox[3] + 20),
                                      (255, 0, 255), 2)
                        cv2.putText(img, my_hand["type"], (bbox[0] - 30, bbox[1] - 30),
                                    cv2.FONT_HERSHEY_PLAIN, 2, (255, 0, 255), 2)
            if draw:
                return all_hands, img
            else:
                return all_hands, None

        def findDistance(self, p1, p2, img=None):
            """
            Compute the distance between two keypoints (x and y only).

            If img is given, the two points, their midpoint and the connecting line are drawn on it.
            """
            x1, y1 = p1[0], p1[1]
            x2, y2 = p2[0], p2[1]
            cx, cy = (x1 + x2) // 2, (y1 + y2) // 2
            length = math.hypot(x2 - x1, y2 - y1)
            info = (x1, y1, x2, y2, cx, cy)
            if img is not None:
                cv2.circle(img, (x1, y1), 15, (255, 0, 255), cv2.FILLED)
                cv2.circle(img, (x2, y2), 15, (255, 0, 255), cv2.FILLED)
                cv2.line(img, (x1, y1), (x2, y2), (255, 0, 255), 3)
                cv2.circle(img, (cx, cy), 15, (255, 0, 255), cv2.FILLED)
                return length, info, img
            else:
                return length, info, None
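The arithmetic inside `findHands` and `findDistance` can be checked without a camera or MediaPipe. The sketch below repeats the same three steps (scaling normalized landmarks to pixels, deriving the bounding box and its center, and measuring the 2D distance) in dependency-free Python. The function names `to_pixels`, `bounding_box` and `distance`, and the sample landmark values, are made up for illustration.

```python
import math


def to_pixels(landmarks, width, height):
    """Scale normalized (x, y, z) landmarks to integer pixel coordinates.

    z is scaled by the image width, mirroring the module above.
    """
    return [[int(x * width), int(y * height), int(z * width)] for x, y, z in landmarks]


def bounding_box(points):
    """Return ((xmin, ymin, box_w, box_h), (cx, cy)) for a list of pixel points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    xmin, ymin = min(xs), min(ys)
    box_w, box_h = max(xs) - xmin, max(ys) - ymin
    center = (xmin + box_w // 2, ymin + box_h // 2)
    return (xmin, ymin, box_w, box_h), center


def distance(p1, p2):
    """Euclidean distance in the image plane; any z component is ignored."""
    return math.hypot(p2[0] - p1[0], p2[1] - p1[1])


# Three made-up normalized landmarks on a 1280x720 frame.
sample = [(0.25, 0.5, 0.0), (0.5, 0.25, -0.01), (0.75, 0.75, 0.02)]
pts = to_pixels(sample, 1280, 720)
bbox, center = bounding_box(pts)
```

Because the distance is measured in pixels, a fixed threshold such as the 50 used later depends on the frame resolution and on how far the hand is from the camera.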

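The main script, which uses the module above, is as follows:

    import cv2
    from handTrackingModule import HandDetector
    import numpy as np
    import time

    # Open the camera (index 2 here; 0 is usually the default device)
    cap = cv2.VideoCapture(2)
    cap.set(cv2.CAP_PROP_FRAME_WIDTH, 1280)
    cap.set(cv2.CAP_PROP_FRAME_HEIGHT, 720)

    colorR = (255, 0, 255)  # block color when idle (BGR)
    colorB = (255, 0, 0)    # block color while being dragged (BGR)
    detector = HandDetector(detectionCon=0.8)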

    # Draggable block
    class DragRect:
        def __init__(self, posCenter, size=(150, 150)):
            self.posCenter = posCenter
            self.size = size
            self.color = colorR

        def update(self, cursor):
            cx, cy = self.posCenter[0], self.posCenter[1]
            w, h = self.size
            # If the keypoint is inside the block, lock the block to it
            if cx - w // 2 < cursor[0] < cx + w // 2 and cy - h // 2 < cursor[1] < cy + h // 2:
                self.posCenter = cursor[:2]
                self.color = colorB
            else:
                self.color = colorR

    # Create five blocks in a row
    rectList = []
    for x in range(5):
        rectList.append(DragRect([x * 250 + 150, 150]))

    prev_time = time.time()
    while True:
        success, img = cap.read()
        if not success:  # no frame available, stop
            break
        img = cv2.flip(img, 1)
        hands, img = detector.findHands(img)
        # Check whether a hand was detected
        if hands:
            lmList_1 = hands[0]['lmList']
            # Distance between the index and middle fingertips
            dist, _, _ = detector.findDistance(lmList_1[8], lmList_1[12], img)
            if dist < 50:
                for rect in rectList:
                    rect.update(lmList_1[8])
            else:
                for rect in rectList:
                    rect.color = colorR

        # Draw the blocks on a blank layer, then blend it over the frame
        imgNew = np.zeros_like(img, np.uint8)
        for rect in rectList:
            cx, cy = rect.posCenter[0], rect.posCenter[1]
            w, h = rect.size
            color = rect.color
            cv2.rectangle(imgNew, (cx - w // 2, cy - h // 2),
                          (cx + w // 2, cy + h // 2), color, cv2.FILLED)
        out = img.copy()
        alpha = 0.08
        mask = imgNew.astype(bool)
        out[mask] = cv2.addWeighted(img, alpha, imgNew, 1 - alpha, 0)[mask]

        current_time = time.time()
        fps = 1 / max(current_time - prev_time, 1e-6)  # avoid division by zero
        prev_time = current_time
        cv2.putText(out, f'FPS: {int(fps)}', (20, 70), cv2.FONT_HERSHEY_PLAIN,
                    3, (0, 255, 0), 3)
        cv2.imshow("hand detect", out)
        if cv2.waitKey(1) & 0xFF == ord('q'):  # press q to quit
            break

    cap.release()
    cv2.destroyAllWindows()
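The drag behavior in the main loop comes down to two checks per frame: are the two fingertips closer than the threshold, and is the index fingertip inside a block. The sketch below simulates that logic on a short, made-up sequence of frames with no camera involved. `Block` is a hypothetical stand-in for `DragRect`, and `PINCH_THRESHOLD` simply reuses the value 50 from the script.

```python
PINCH_THRESHOLD = 50  # pixels, the same value the main loop compares against


class Block:
    """Axis-aligned block given by center and size (illustrative stand-in for DragRect)."""

    def __init__(self, center, size=(150, 150)):
        self.center = tuple(center)
        self.size = size
        self.grabbed = False

    def contains(self, point):
        """Strict point-in-rectangle test, as in DragRect.update."""
        cx, cy = self.center
        w, h = self.size
        return cx - w // 2 < point[0] < cx + w // 2 and cy - h // 2 < point[1] < cy + h // 2

    def update(self, cursor, pinch_distance):
        """Follow the cursor only while pinching with the cursor inside the block."""
        self.grabbed = pinch_distance < PINCH_THRESHOLD and self.contains(cursor)
        if self.grabbed:
            self.center = (cursor[0], cursor[1])


block = Block((150, 150))
# One (cursor position, fingertip distance) pair per simulated frame.
frames = [
    ((400, 400), 30),  # pinching, but outside the block: ignored
    ((160, 160), 80),  # inside the block, but fingers apart: ignored
    ((160, 160), 30),  # pinching inside the block: grabbed
    ((200, 180), 30),  # still pinching: the block follows the cursor
    ((230, 200), 90),  # fingers released: the block stays where it was
]
for cursor, dist in frames:
    block.update(cursor, dist)
```

Since the block is re-tested against the cursor on every frame, a fingertip that jumps more than half a block width between two frames drops the block. The original script has the same limitation.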
